hbase-hdfs-cycling-data

Install this demo on an existing Kubernetes cluster:

$ stackablectl demo install hbase-hdfs-load-cycling-data| This demo should not be run alongside other demos. |

System requirements

To run this demo, your system needs at least:

-

3 cpu units (core/hyperthread)

-

6GiB memory

-

16GiB disk storage

Overview

This demo will

-

Install the required Stackable operators.

-

Spin up the following data products:

-

HBase: An open source distributed, scalable, big data store. This demo uses it to store the cyclist dataset and enable access.

-

HDFS: A distributed file system used to intermediately store the dataset before importing it into HBase

-

-

Use distcp to copy a cyclist dataset from an S3 bucket into HDFS.

-

Create HFiles, a File format for hBase consisting of sorted key/value pairs. Both keys and values are byte arrays.

-

Load Hfiles into an existing table via the

Importtsvutility, which will load data inTSVorCSVformat into HBase. -

Query data via the

hbaseshell, which is an interactive shell to execute commands on the created table

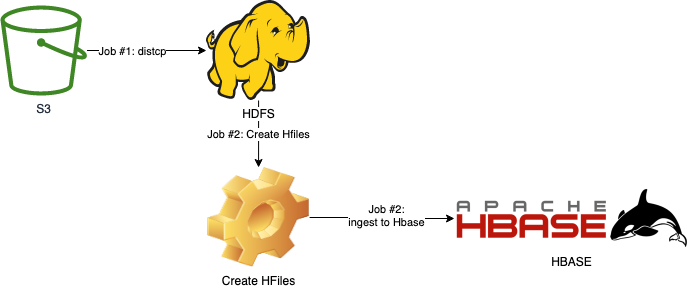

You can see the deployed products and their relationship in the following diagram:

Listing the deployed Stackable services

To list the installed Stackable services run the following command: stackablectl stacklet list

$ stackablectl stacklet list

┌───────────┬───────────┬───────────┬──────────────────────────────────────────────────────────────┬─────────────────────────────────┐

│ PRODUCT ┆ NAME ┆ NAMESPACE ┆ ENDPOINTS ┆ CONDITIONS │

╞═══════════╪═══════════╪═══════════╪══════════════════════════════════════════════════════════════╪═════════════════════════════════╡

│ hbase ┆ hbase ┆ default ┆ regionserver 172.18.0.2:31521 ┆ Available, Reconciling, Running │

│ ┆ ┆ ┆ ui-http 172.18.0.2:32064 ┆ │

│ ┆ ┆ ┆ metrics 172.18.0.2:31372 ┆ │

├╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌┤

│ hdfs ┆ hdfs ┆ default ┆ datanode-default-0-listener-data 172.18.0.2:31990 ┆ Available, Reconciling, Running │

│ ┆ ┆ ┆ datanode-default-0-listener-http http://172.18.0.2:30659 ┆ │

│ ┆ ┆ ┆ datanode-default-0-listener-ipc 172.18.0.2:30678 ┆ │

│ ┆ ┆ ┆ datanode-default-0-listener-metrics 172.18.0.2:31531 ┆ │

│ ┆ ┆ ┆ namenode-default-0-http http://172.18.0.2:32543 ┆ │

│ ┆ ┆ ┆ namenode-default-0-metrics 172.18.0.2:30098 ┆ │

│ ┆ ┆ ┆ namenode-default-0-rpc 172.18.0.2:30915 ┆ │

│ ┆ ┆ ┆ namenode-default-1-http http://172.18.0.2:31333 ┆ │

│ ┆ ┆ ┆ namenode-default-1-metrics 172.18.0.2:30862 ┆ │

│ ┆ ┆ ┆ namenode-default-1-rpc 172.18.0.2:31440 ┆ │

├╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌┼╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌╌┤

│ zookeeper ┆ zookeeper ┆ default ┆ ┆ Available, Reconciling, Running │

└───────────┴───────────┴───────────┴──────────────────────────────────────────────────────────────┴─────────────────────────────────┘|

When a product instance has not finished starting yet, the service will have no endpoint. Depending on your internet connectivity, creating all the product instances might take considerable time. A warning might be shown if the product is not ready yet. |

Loading data

This demo will run two jobs to automatically load data.

distcp-cycling-data

DistCp (distributed copy) efficiently transfers large amounts of data from one location to another. Therefore, the first Job uses DistCp to copy data from a S3 bucket into HDFS. Below, you’ll see parts from the logs.

Copying s3a://public-backup-nyc-tlc/cycling-tripdata/demo-cycling-tripdata.csv.gz to hdfs://hdfs/data/raw/demo-cycling-tripdata.csv.gz

[LocalJobRunner Map Task Executor #0] mapred.RetriableFileCopyCommand (RetriableFileCopyCommand.java:getTempFile(235)) - Creating temp file: hdfs://hdfs/data/raw/.distcp.tmp.attempt_local60745921_0001_m_000000_0.1663687068145

[LocalJobRunner Map Task Executor #0] mapred.RetriableFileCopyCommand (RetriableFileCopyCommand.java:doCopy(127)) - Writing to temporary target file path hdfs://hdfs/data/raw/.distcp.tmp.attempt_local60745921_0001_m_000000_0.1663687068145

[LocalJobRunner Map Task Executor #0] mapred.RetriableFileCopyCommand (RetriableFileCopyCommand.java:doCopy(153)) - Renaming temporary target file path hdfs://hdfs/data/raw/.distcp.tmp.attempt_local60745921_0001_m_000000_0.1663687068145 to hdfs://hdfs/data/raw/demo-cycling-tripdata.csv.gz

[LocalJobRunner Map Task Executor #0] mapred.RetriableFileCopyCommand (RetriableFileCopyCommand.java:doCopy(157)) - Completed writing hdfs://hdfs/data/raw/demo-cycling-tripdata.csv.gz (3342891 bytes)

[LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(634)) -

[LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:done(1244)) - Task:attempt_local60745921_0001_m_000000_0 is done. And is in the process of committing

[LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(634)) -

[LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:commit(1421)) - Task attempt_local60745921_0001_m_000000_0 is allowed to commit now

[LocalJobRunner Map Task Executor #0] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(609)) - Saved output of task 'attempt_local60745921_0001_m_000000_0' to file:/tmp/hadoop/mapred/staging/stackable339030898/.staging/_distcp-1760904616/_logs

[LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(634)) - 100.0% Copying s3a://public-backup-nyc-tlc/cycling-tripdata/demo-cycling-tripdata.csv.gz to hdfs://hdfs/data/raw/demo-cycling-tripdata.csv.gzcreate-hfile-and-import-to-hbase

The second Job consists of 2 steps.

First, we use org.apache.hadoop.hbase.mapreduce.ImportTsv (see ImportTsv Docs) to create a table and Hfiles.

Hfile is an HBase dedicated file format which is performance optimized for HBase.

It stores meta-information about the data and thus increases the performance of HBase.

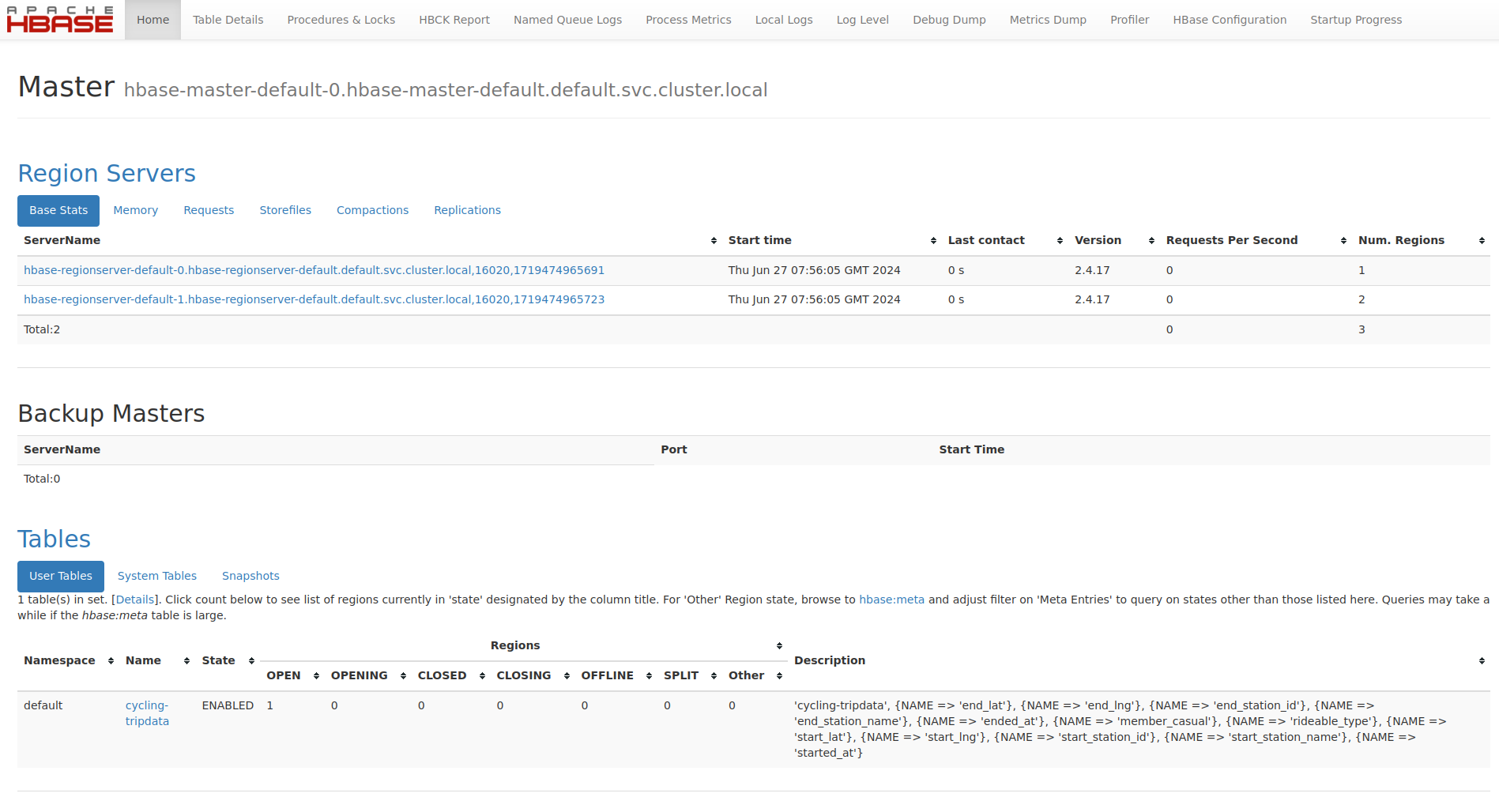

When connecting to the HBase master, opening a HBase shell and executing list, you will see the created table.

However, it’ll contain 0 rows at this point.

You can connect to the shell via:

$ kubectl exec -it hbase-master-default-0 -- bin/hbase shell

If you use k9s, you can drill into the hbase-master-default-0 pod and execute bin/hbase shell.

|

listTABLE

cycling-tripdataSecondly, we’ll use org.apache.hadoop.hbase.tool.LoadIncrementalHFiles (see bulk load docs) to import

the Hfiles into the table and ingest rows.

Now we will see how many rows are in the cycling-tripdata table:

count 'cycling-tripdata'See below for a partial result:

Current count: 1000, row: 02FD41C2518CCF81

Current count: 2000, row: 06022E151BC79CE0

Current count: 3000, row: 090E4E73A888604A

...

Current count: 82000, row: F7A8C86949FD9B1B

Current count: 83000, row: FA9AA8F17E766FD5

Current count: 84000, row: FDBD9EC46964C103

84777 row(s)

Took 13.4666 seconds

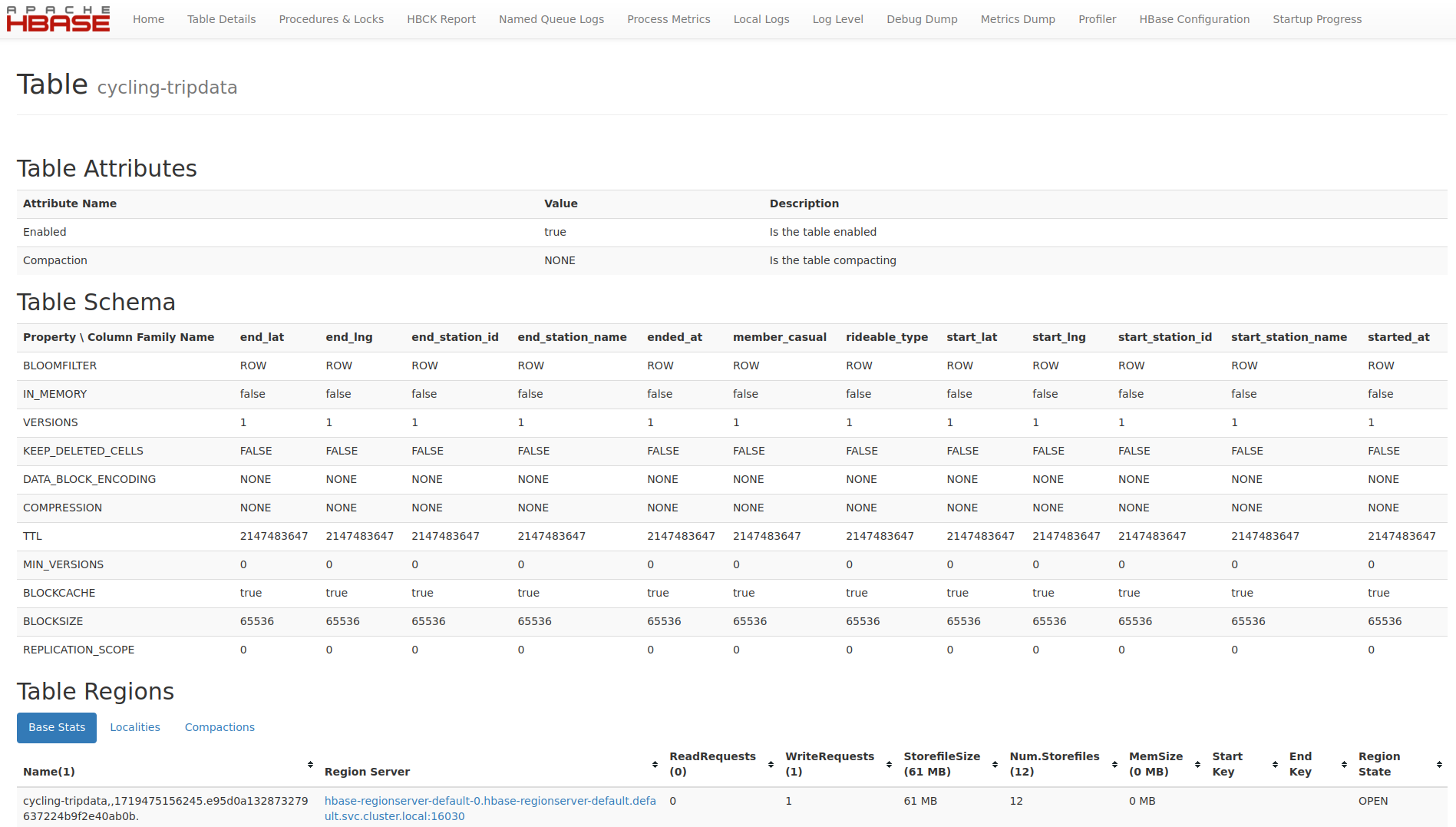

=> 84777Inspecting the Table

You can now use the table and the data. You can use all available HBase shell commands.

describe 'cycling-tripdata'Below, you’ll see the table description.

Table cycling-tripdata is ENABLED

cycling-tripdata

COLUMN FAMILIES DESCRIPTION

{NAME => 'end_lat', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'end_lng', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'end_station_id', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'end_station_name', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'ended_at', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'member_casual', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'rideable_type', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'start_lat', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'start_lng', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'start_station_id', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'start_station_name', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'started_at', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}Accessing the HBase web interface

|

Run |

The HBase web UI will give you information on the status and metrics of your HBase cluster. See below for the start page.

From the start page you can check more details, for example a list of created tables.

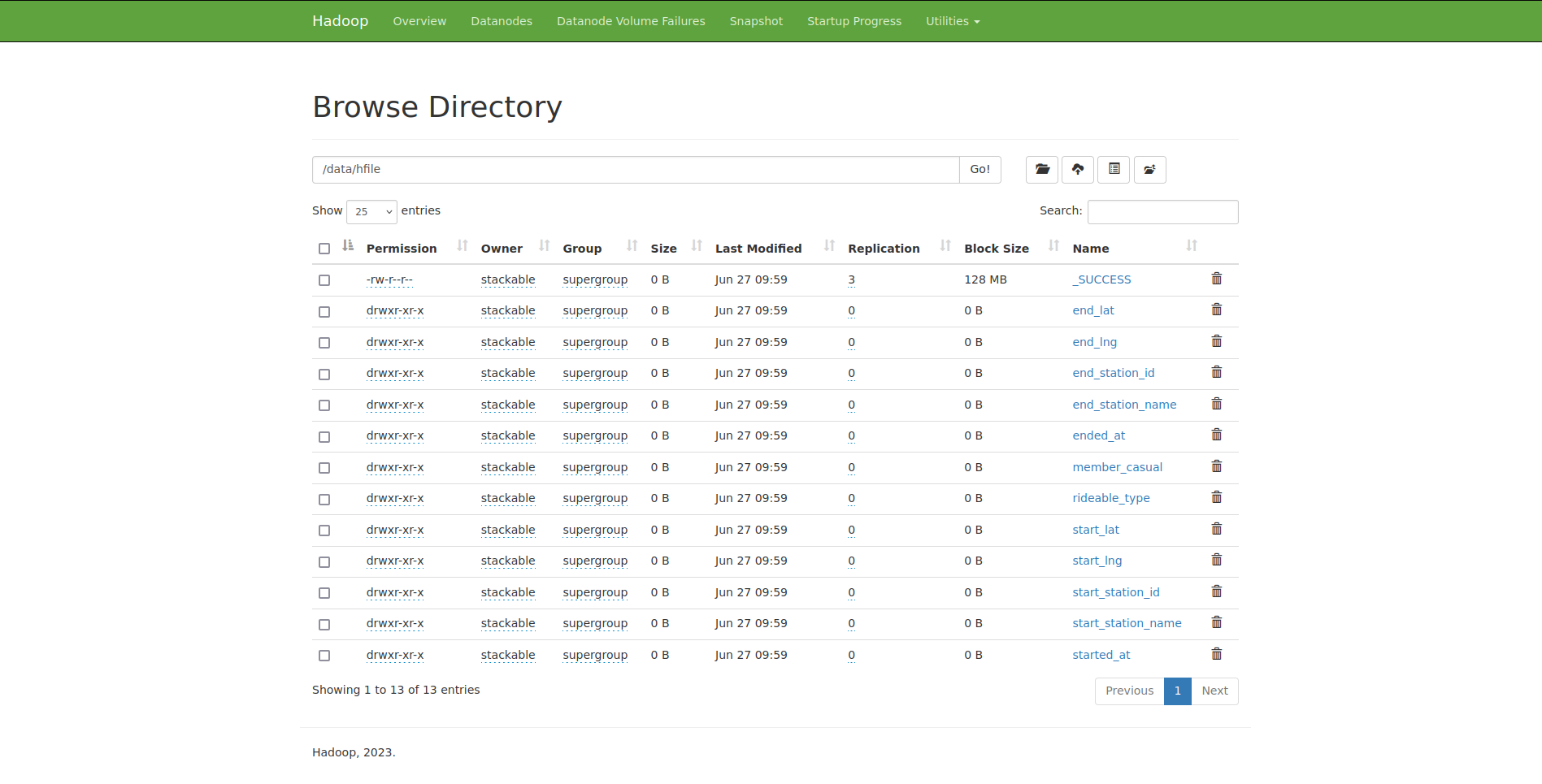

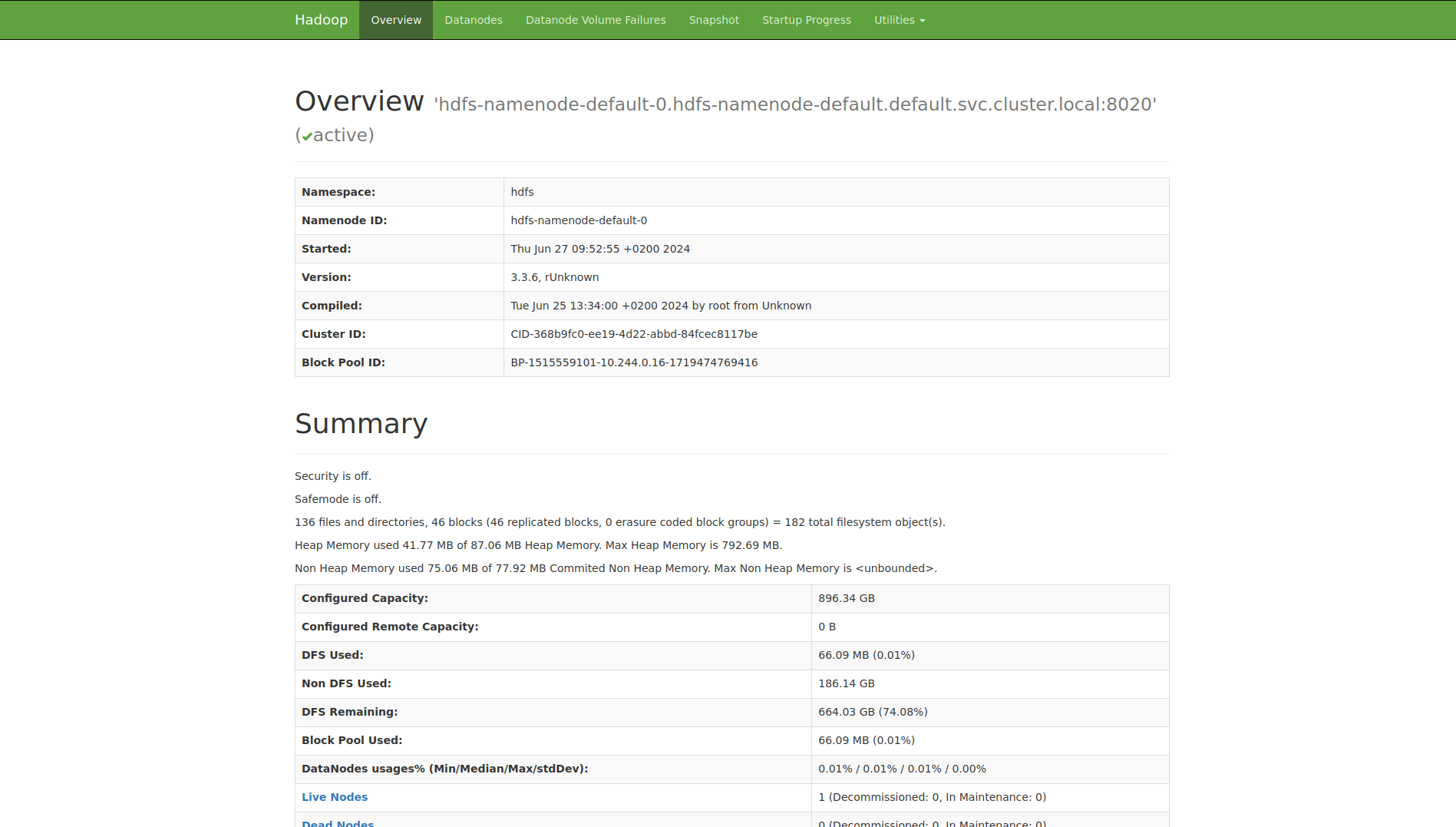

Accessing the HDFS web interface

You can also see HDFS details via a UI by running stackablectl stacklet list and following the link next to one of the namenodes.

Below you will see the overview of your HDFS cluster.

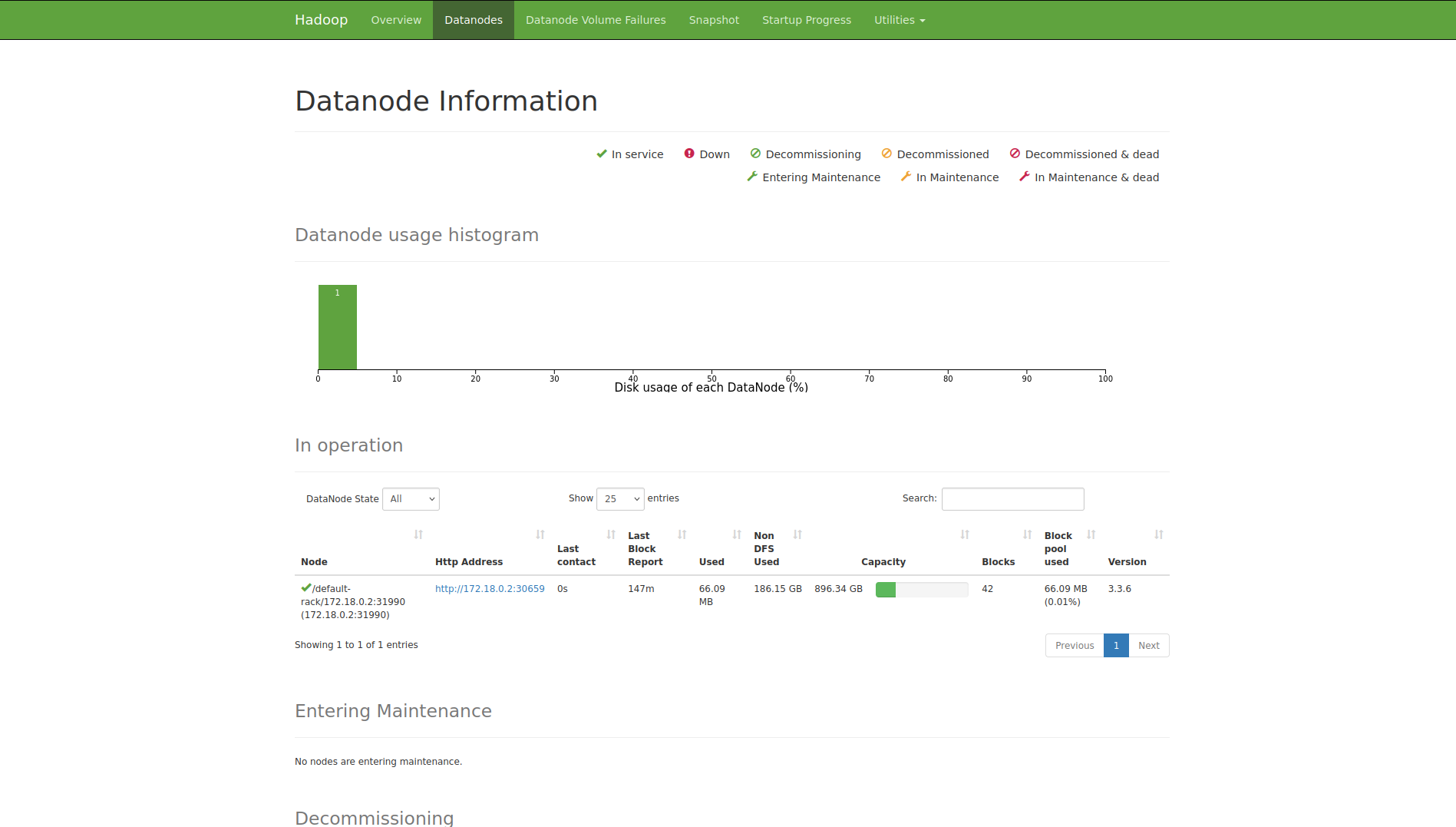

The UI will give you information on the datanodes via the Datanodes tab.

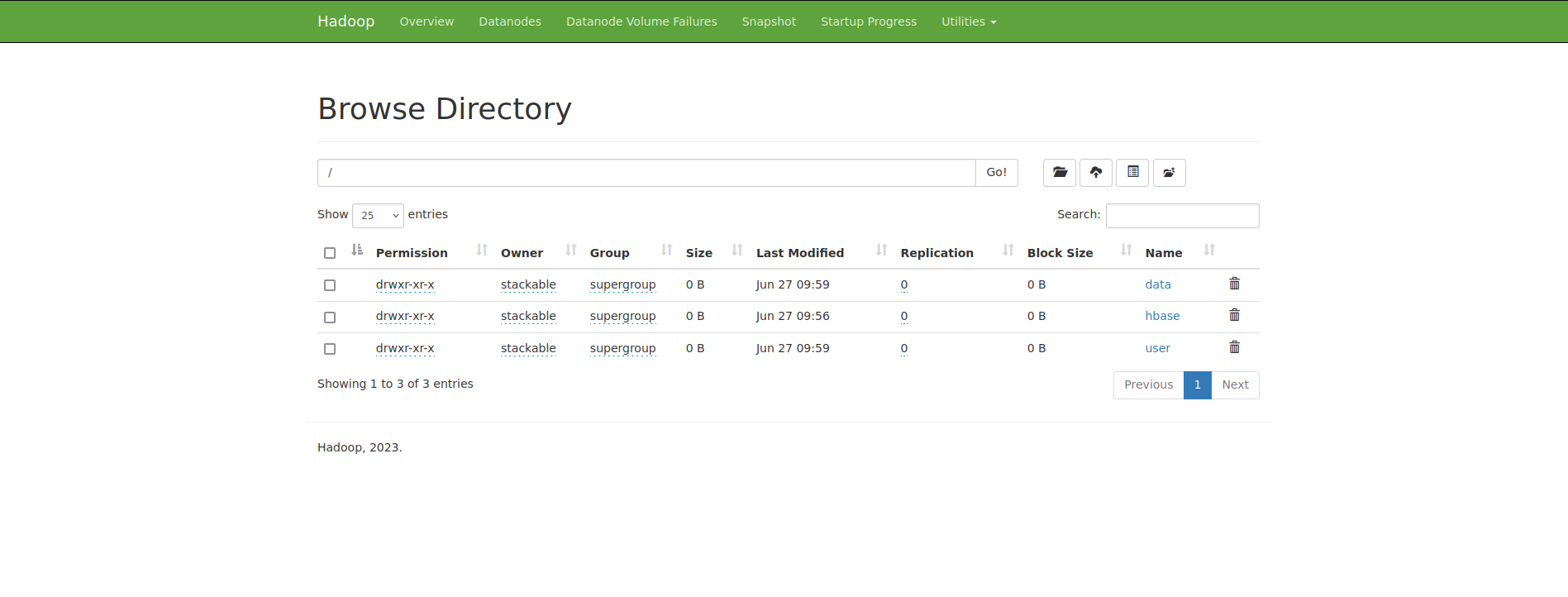

You can also browse the file system by clicking on the Utilities tab and selecting Browse the file system.

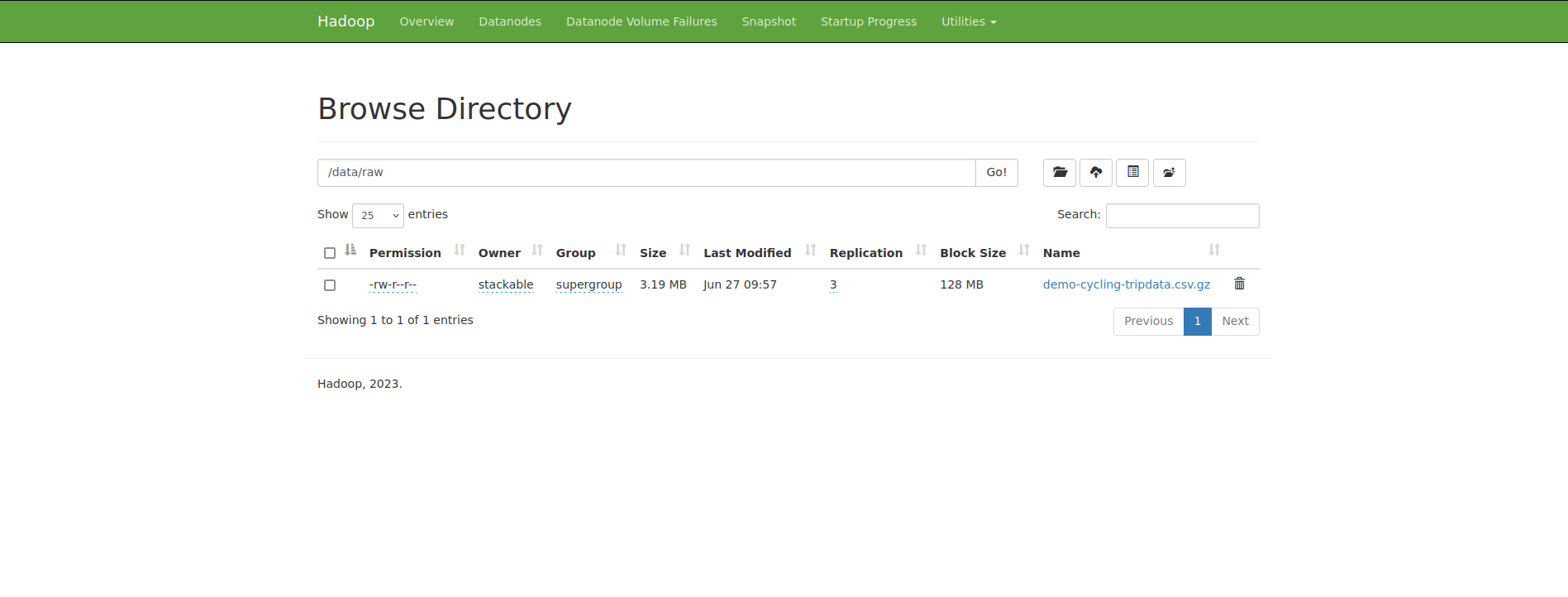

Navigate in the file system to the folder data and then the raw folder.

Here you can find the raw data from the distcp job.

Selecting the folder data and then hfile instead, gives you the structure of the Hfiles.